Background Story

Sometime in January, I needed to scrape about 10k assets (images/texts) and data for an NFT data project I had going at the time. The data was unstructured, with about 6-7 different varieties in total. In the end, I needed to insert these 10k records into an Excel sheet and a JSON file.

A manual approach to this wasn’t viable. To scrape this data, I had to put a fault-tolerant system in place, for the following reasons:

- network latency (the network speed in my area can’t be relied on)

- power (same as the network)

- accuracy (ensure the file names/descriptions match the specific file)

- efficiency (scrape and organize in a short time)

So with these as my functional requirements, I had to come up with the best approach to solve my problem.

Webscraping 101

I use TypeScript with NestJS for most of my everyday projects because of familiarity and the easy setup.

So let’s do some webscraping before building our queue service.

Dependencies

- puppeteer: for web scraping; read the docs for more info.

- exceljs: to convert data to Excel; check the docs.

- @tensorflow-models/mobilenet and @tensorflow/tfjs-node: built on top of TensorFlow.js, for some image classification; see the docs.

Let’s do some basic scraping:
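Here is a minimal sketch of the scraping step, written with the requirements above in mind. It assumes the target page lists each asset in a card element. The `.asset-card`, `.asset-name`, and `.asset-description` selectors are hypothetical placeholders, and so are the `PageLike` interface and the `withRetry`, `toFileName`, and `scrapeAssets` helpers. None of them are part of puppeteer. `PageLike` mirrors only the two puppeteer `Page` methods the sketch calls (`goto` and `evaluate`), so the logic can also be exercised with a fake page. Navigation is retried with exponential backoff to cope with an unreliable network. Each record also gets a file name derived from its own name, so every description stays attached to the right file.

```typescript
// Minimal structural type for the two puppeteer Page methods this sketch uses (assumption).
export interface PageLike {
  goto(
    url: string,
    options?: { waitUntil?: "load" | "domcontentloaded" | "networkidle0" | "networkidle2"; timeout?: number },
  ): Promise<unknown>;
  evaluate<T>(fn: () => T): Promise<T>;
}

export interface RawAsset {
  name: string;
  description: string;
  imageUrl: string;
}

export interface AssetRecord extends RawAsset {
  fileName: string;
}

const sleep = (ms: number) => new Promise<void>((resolve) => setTimeout(resolve, ms));

// Retry an async operation with exponential backoff (baseDelayMs, then 2x, 4x, ...).
export async function withRetry<T>(op: () => Promise<T>, attempts = 3, baseDelayMs = 500): Promise<T> {
  let lastError: unknown;
  for (let attempt = 0; attempt < attempts; attempt++) {
    try {
      return await op();
    } catch (err) {
      lastError = err;
      if (attempt < attempts - 1) await sleep(baseDelayMs * 2 ** attempt);
    }
  }
  throw lastError;
}

// Derive a filesystem-safe file name from the asset's own name and image extension,
// so each description stays tied to the file it describes.
export function toFileName(name: string, imageUrl: string): string {
  const ext = imageUrl.split("?")[0].match(/\.(png|jpe?g|gif|webp)$/i)?.[1]?.toLowerCase() ?? "png";
  const slug = name.trim().toLowerCase().replace(/[^a-z0-9]+/g, "-").replace(/^-+|-+$/g, "");
  return `${slug}.${ext}`;
}

export async function scrapeAssets(page: PageLike, url: string): Promise<AssetRecord[]> {
  await withRetry(() => page.goto(url, { waitUntil: "networkidle2", timeout: 60_000 }));

  // This callback runs inside the browser. The selectors are hypothetical placeholders;
  // globalThis.document is used so the file compiles without the DOM lib in tsconfig.
  const raw = await page.evaluate((): RawAsset[] => {
    const doc = (globalThis as any).document;
    return Array.from(doc.querySelectorAll(".asset-card")).map((card: any) => ({
      name: card.querySelector(".asset-name")?.textContent?.trim() ?? "",
      description: card.querySelector(".asset-description")?.textContent?.trim() ?? "",
      imageUrl: card.querySelector("img")?.getAttribute("src") ?? "",
    }));
  });

  // Drop incomplete entries, then attach the derived file name.
  return raw
    .filter((asset) => asset.name !== "" && asset.imageUrl !== "")
    .map((asset) => ({ ...asset, fileName: toFileName(asset.name, asset.imageUrl) }));
}
```

With puppeteer installed, you would pass it a real page. For example: `const browser = await puppeteer.launch(); const page = await browser.newPage(); const records = await scrapeAssets(page, url); await browser.close();`. Depending on your puppeteer version, you may need a cast for the page to match `PageLike`.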


Building the queue service

N/B: There are trade-offs to these implementations. An isolated system dedicated to queue processing would be the preferred, more scalable solution: it takes the load off the server receiving requests and processes the data by priority or in arrival order. I’m sharing this approach only because it gets things done quickly without incurring extra cost. This is not the best implementation out there.

Typical systems used by big tech include:

- RabbitMQ

- Apache Kafka

- Google Cloud Pub/Sub

- Amazon MQ (others include AWS SNS and AWS SQS)

- Redis

There are two separate approaches I’ll talk about in this post. The first, baseline approach emulates how a queue operates: FIFO (First In, First Out), or selection based on priority.

The second uses Postgres as the database engine: the database handles retrieving the data and hands it out for queue processing.

1. Using a basic queue data structure
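A minimal sketch of the queue approach in TypeScript. `Queue` is a plain FIFO queue backed by an array with a moving head index, so dequeuing avoids repeated `Array.prototype.shift` calls. `processInBatches` drains the queue a batch at a time and sends failed jobs back to the tail until a retry limit is reached. Both names, the `{ data, attempts }` job shape, and the retry policy are assumptions for illustration. A priority-based variant would replace the array with a heap keyed on priority.

```typescript
// FIFO queue backed by an array plus a head index, giving amortized O(1) dequeue
// instead of paying for Array.prototype.shift on every call.
export class Queue<T> {
  private items: T[] = [];
  private head = 0;

  enqueue(item: T): void {
    this.items.push(item);
  }

  dequeue(): T | undefined {
    if (this.head >= this.items.length) return undefined;
    const item = this.items[this.head++];
    // Occasionally drop consumed slots so they can be garbage-collected.
    if (this.head > 1024 && this.head * 2 > this.items.length) {
      this.items = this.items.slice(this.head);
      this.head = 0;
    }
    return item;
  }

  get size(): number {
    return this.items.length - this.head;
  }

  // Remove up to `n` items, oldest first.
  dequeueBatch(n: number): T[] {
    const batch: T[] = [];
    while (batch.length < n && this.size > 0) batch.push(this.dequeue() as T);
    return batch;
  }
}

// Hypothetical job wrapper: the item plus how many times it has failed so far.
export interface Job<T> {
  data: T;
  attempts: number;
}

// Drain the queue `batchSize` jobs at a time, running each batch concurrently.
// A failed job goes back to the tail until it has been tried `maxAttempts` times.
export async function processInBatches<T>(
  queue: Queue<Job<T>>,
  batchSize: number,
  handler: (data: T) => Promise<void>,
  maxAttempts = 3,
): Promise<{ done: number; failed: T[] }> {
  if (batchSize < 1) throw new RangeError("batchSize must be at least 1");
  let done = 0;
  const failed: T[] = [];
  while (queue.size > 0) {
    const batch = queue.dequeueBatch(batchSize);
    await Promise.all(
      batch.map(async (job) => {
        try {
          await handler(job.data);
          done++;
        } catch {
          if (job.attempts + 1 < maxAttempts) queue.enqueue({ data: job.data, attempts: job.attempts + 1 });
          else failed.push(job.data);
        }
      }),
    );
  }
  return { done, failed };
}
```

The batch size caps how many items run concurrently in each round. On a slow or flaky connection, this keeps the number of in-flight requests bounded.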


With this, we can run the operation in batches, based on the number of items that need to be processed.

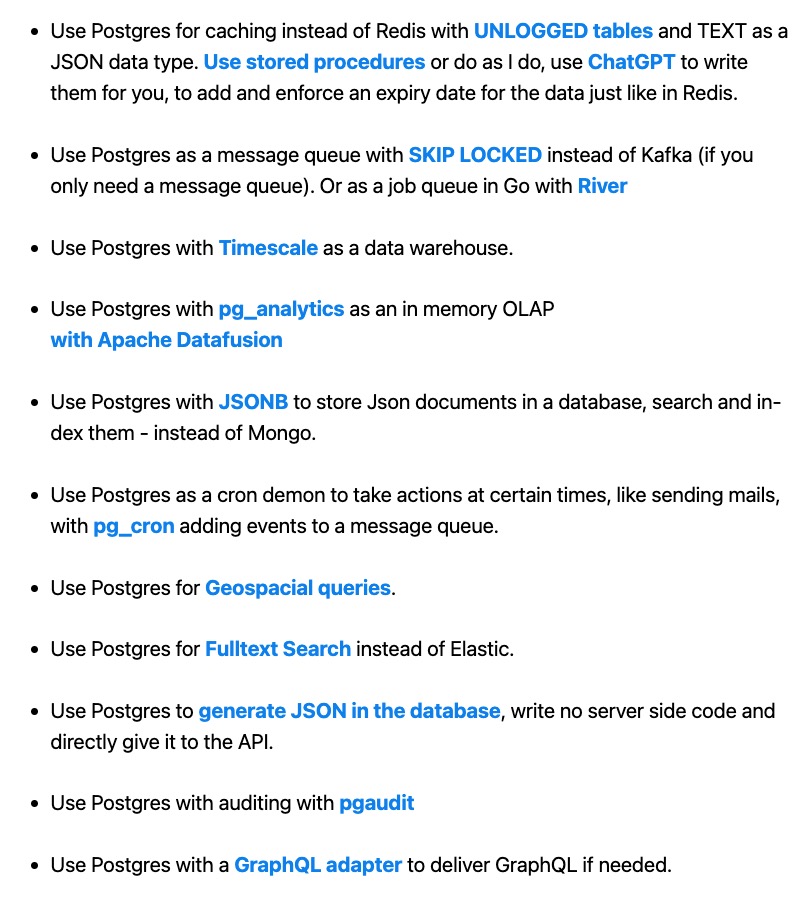

2. Using Postgres with SKIP LOCKED

“Postgres, I use Postgres for everything.” (unknown author)

Postgres can handle most, if not all, of the load thrown at it. The image above affirms the many reasons to choose Postgres. That said, this isn’t entirely true, as there can be pitfalls: some tools are simply better suited to certain use cases.

I have used Postgres for async queue processing, and it delivered well for my needs.

How SKIP LOCKED works under the hood

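SKIP LOCKED is a feature added in PostgreSQL 9.5, mainly for async queue processing. It solves a locking problem relational databases run into when many workers pull from the same table. A SELECT ... FOR UPDATE SKIP LOCKED query locks the rows it returns. Rows that another transaction has already locked are skipped instead of waited on. Because the row locks still apply, no two transactions can claim the same row.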
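To make this behavior concrete, here is a small in-memory model of what SELECT ... FOR UPDATE SKIP LOCKED looks like from the workers’ point of view. It is a conceptual simulation, not how Postgres implements row locks internally. `LockTable`, `claim`, `commit`, and `rollback` are hypothetical names. Two transactions claiming rows at the same time get disjoint sets. A rollback releases its locks, so those rows can be picked up again, which is what makes retries possible. The model does not show plain FOR UPDATE, where the second transaction would wait instead of skipping.

```typescript
// Conceptual model only: each row records which transaction currently holds its lock.
export interface Row {
  id: number;
  processed: boolean;
  lockedBy: string | null;
}

export class LockTable {
  constructor(public rows: Row[]) {}

  // Like SELECT ... WHERE processed = false LIMIT n FOR UPDATE SKIP LOCKED:
  // lock up to `limit` free, unprocessed rows for `tx`, skipping rows another tx holds.
  claim(tx: string, limit: number): Row[] {
    const claimed: Row[] = [];
    for (const row of this.rows) {
      if (claimed.length >= limit) break;
      if (!row.processed && row.lockedBy === null) {
        row.lockedBy = tx;
        claimed.push(row);
      }
    }
    return claimed;
  }

  // Like UPDATE ... SET processed = true followed by COMMIT: changes persist, locks are released.
  commit(tx: string): void {
    for (const row of this.rows) {
      if (row.lockedBy === tx) {
        row.processed = true;
        row.lockedBy = null;
      }
    }
  }

  // Like ROLLBACK: locks are released and the rows can be claimed again.
  rollback(tx: string): void {
    for (const row of this.rows) {
      if (row.lockedBy === tx) row.lockedBy = null;
    }
  }
}
```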

Some advantages include:

- improved performance

- efficiency for atomic commits

- avoids workers blocking on each other’s locks (and the deadlocks that can come with it)

- works within normal ACID transactions

When not to use SKIP LOCKED:

- strict ordering is important (less of a concern if each row has a created-at timestamp)

- when all rows must be processed

- long-running transactions

- when no retry mechanism is in place

The SKIP LOCKED query works as follows:

- BEGIN starts a transaction

- the SELECT picks orders where processed = false

- FOR UPDATE locks the selected rows, and SKIP LOCKED skips any row already locked by another transaction instead of waiting for it

- LIMIT 20 caps each transaction at 20 rows

- COMMIT ends the transaction afterwards and releases the locks
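The transaction described in these steps can be sketched in TypeScript as follows. The `orders` table and `processed` column come from the steps. The `id` and `payload` columns, the `SqlClient` interface, and `processOrderBatch` are assumptions. `SqlClient` mirrors node-postgres’ `query(text, values)` call, which resolves to a result with a `rows` array, so a `PoolClient` from `pg` should fit it structurally.

```typescript
// Only the slice of node-postgres used here: query(text, values) resolving to { rows }.
export interface SqlClient {
  query(text: string, values?: unknown[]): Promise<{ rows: unknown[] }>;
}

// Hypothetical row shape: `id` and `payload` are assumed columns of `orders`.
export interface OrderRow {
  id: number;
  payload: unknown;
}

// Claim up to `limit` unprocessed orders, handle them, and mark them processed,
// all inside one transaction on a single connection.
export async function processOrderBatch(
  client: SqlClient,
  handle: (order: OrderRow) => Promise<void>,
  limit = 20,
): Promise<number> {
  await client.query("BEGIN");
  try {
    // FOR UPDATE locks the selected rows; SKIP LOCKED skips rows another transaction holds.
    const { rows } = await client.query(
      `SELECT id, payload
         FROM orders
        WHERE processed = false
        ORDER BY id
        LIMIT $1
        FOR UPDATE SKIP LOCKED`,
      [limit],
    );
    const orders = rows as OrderRow[];
    for (const order of orders) await handle(order);
    if (orders.length > 0) {
      await client.query("UPDATE orders SET processed = true WHERE id = ANY($1::int[])", [orders.map((o) => o.id)]);
    }
    await client.query("COMMIT");
    return orders.length;
  } catch (err) {
    // ROLLBACK releases the row locks, so other workers can claim these orders again.
    await client.query("ROLLBACK");
    throw err;
  }
}
```

All statements must run on the same connection. Check one out with `const client = await pool.connect()` and call `client.release()` in a `finally` block. Calling `pool.query("BEGIN")` instead would send each statement to whichever pooled connection happens to be free. The rows stay locked while `handle` runs, so keep the handler short to avoid long-running transactions.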

Adding a retry mechanism or batch processing

Dependencies

- typeorm: ORM for Node.js

- pg: PostgreSQL client for Node.js

- nestjs: framework that runs on top of Express or Fastify
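A minimal sketch of a retry mechanism built on the same SKIP LOCKED query. It is written against the four TypeORM `QueryRunner` methods it needs: `startTransaction`, `commitTransaction`, `rollbackTransaction`, and `query`. Everything else is hypothetical: the `jobs` table with `status`, `attempts`, and `next_attempt_at` columns, as well as `MAX_ATTEMPTS`, `backoffSeconds`, and `runBatch`. Each claimed job is handled on its own. Successes are marked `done`. Failures get an exponentially growing delay before they become eligible again. Jobs that exhaust their attempts are marked `failed` instead of looping forever.

```typescript
// Minimal slice of TypeORM's QueryRunner used here; dataSource.createQueryRunner() provides these.
export interface TxRunner {
  startTransaction(): Promise<void>;
  commitTransaction(): Promise<void>;
  rollbackTransaction(): Promise<void>;
  query(sql: string, params?: unknown[]): Promise<any>;
}

// Hypothetical row shape of a `jobs` table.
export interface JobRow {
  id: number;
  payload: unknown;
  attempts: number;
}

export const MAX_ATTEMPTS = 5;

// Exponential backoff in seconds (2, 4, 8, ...), capped at five minutes.
export function backoffSeconds(attempts: number): number {
  return Math.min(2 ** attempts, 300);
}

// Claim one batch with SKIP LOCKED, then record a per-job outcome:
// done, scheduled for retry with backoff, or failed after MAX_ATTEMPTS.
export async function runBatch(
  runner: TxRunner,
  handle: (job: JobRow) => Promise<void>,
  batchSize = 20,
): Promise<{ ok: number; retried: number; dead: number }> {
  const stats = { ok: 0, retried: 0, dead: 0 };
  await runner.startTransaction();
  try {
    const jobs: JobRow[] = await runner.query(
      `SELECT id, payload, attempts
         FROM jobs
        WHERE status = 'pending' AND next_attempt_at <= now()
        ORDER BY id
        LIMIT $1
        FOR UPDATE SKIP LOCKED`,
      [batchSize],
    );
    for (const job of jobs) {
      // Handler errors are recorded per job; database errors fall through to the outer catch.
      const succeeded = await handle(job).then(() => true, () => false);
      if (succeeded) {
        await runner.query(`UPDATE jobs SET status = 'done' WHERE id = $1`, [job.id]);
        stats.ok++;
        continue;
      }
      const attempts = job.attempts + 1;
      if (attempts >= MAX_ATTEMPTS) {
        await runner.query(`UPDATE jobs SET status = 'failed', attempts = $2 WHERE id = $1`, [job.id, attempts]);
        stats.dead++;
      } else {
        await runner.query(
          `UPDATE jobs SET attempts = $2, next_attempt_at = now() + make_interval(secs => $3) WHERE id = $1`,
          [job.id, attempts, backoffSeconds(attempts)],
        );
        stats.retried++;
      }
    }
    await runner.commitTransaction();
  } catch (err) {
    await runner.rollbackTransaction();
    throw err;
  }
  return stats;
}
```

In a NestJS service, you could create the runner with `const runner = dataSource.createQueryRunner()` and call `await runner.connect()`. Then keep calling `runBatch` while it finds jobs, and call `await runner.release()` in a `finally` block. The sketch relies on TypeORM’s `query()` resolving to the row array for a Postgres `SELECT`.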
